In 2022, we announced that LUIS would be retired on September 30, 2025, and recommended migrating to conversational language understanding (CLU). In response to feedback from our valued customers, we have decided to extend the availability of certain LUIS functionality until March 31, 2026. This extension gives our customers more time to migrate smoothly to CLU, with minimal disruption to their operations.

Extension Details

Here are some details on when and how the LUIS functionality will change:

October 2022: LUIS resource creation is no longer available.

October 31, 2025:

The LUIS portal will no longer be available.

LUIS Authoring (via REST API only) will continue to be available.

March 31, 2026:

LUIS Authoring, including via REST API, will no longer be available.

LUIS Runtime will no longer be available.

Before these retirement dates, please migrate to conversational language understanding (CLU), a capability of Azure AI Service for Language. CLU provides many of the same capabilities as LUIS, plus enhancements such as:

Enhanced AI quality using state-of-the-art machine learning models

The LLM-powered Quick Deploy feature to deploy a CLU model with no training

Multilingual capabilities that allow you to train in one language and predict in 99+ others

Built-in routing between conversational language understanding and custom question answering projects using orchestration workflow

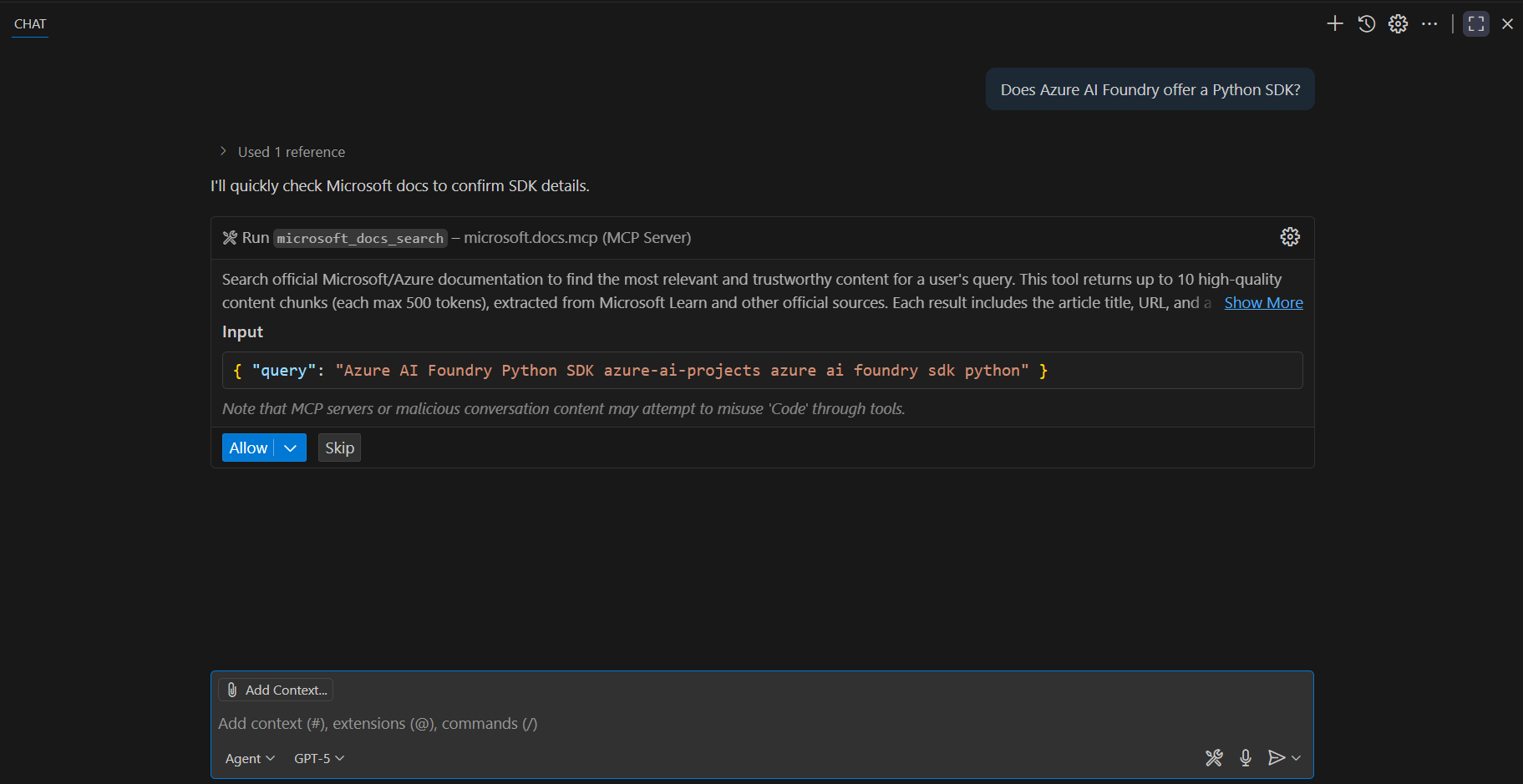

Access to a suite of features available on Azure AI Service for Language in Azure AI Foundry

Looking Ahead

On March 31, 2026, LUIS will be fully retired, and all LUIS inferencing (prediction) requests will return an error. We encourage all our customers to complete their migration to CLU as soon as possible to avoid any disruption.
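For teams updating client code, a LUIS prediction call is replaced by a request to the CLU runtime's analyze-conversations endpoint. The sketch below builds (but does not send) such a request using only the Python standard library and reads the top intent from a parsed response. It assumes the `2023-04-01` runtime API version and a key-based `Ocp-Apim-Subscription-Key` header; the helper names `build_clu_request` and `top_intent`, and the project, deployment, and participant values, are hypothetical placeholders. Check the current CLU REST reference before relying on it.

```python
import json
import urllib.request

# Assumed GA runtime api-version for CLU; verify against current documentation.
API_VERSION = "2023-04-01"


def build_clu_request(endpoint: str, key: str, project: str,
                      deployment: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a CLU analyze-conversations POST request.

    build_clu_request is a hypothetical helper, not part of any Azure SDK.
    """
    url = (f"{endpoint.rstrip('/')}/language/:analyze-conversations"
           f"?api-version={API_VERSION}")
    body = {
        "kind": "Conversation",
        "analysisInput": {
            "conversationItem": {
                "id": "1",              # placeholder utterance id
                "participantId": "user",  # placeholder participant id
                "text": text,
            }
        },
        "parameters": {
            "projectName": project,        # your CLU project name
            "deploymentName": deployment,  # your CLU deployment name
            "stringIndexType": "TextElement_V8",
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def top_intent(response: dict) -> str:
    """Return the top intent from a parsed CLU conversation response body."""
    return response["result"]["prediction"]["topIntent"]
```

Sending the request requires a real Language resource, for example `with urllib.request.urlopen(req) as resp: print(top_intent(json.load(resp)))`. Keeping request construction separate from the network call makes it easy to unit-test the migrated code path before the LUIS runtime is switched off.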

We appreciate your understanding and cooperation as we work together to ensure a smooth migration.

Thank you for your continued support and trust in our services.


